Apple Announces Foundation Models Framework for Developers to Leverage AI

Apple at WWDC today announced the Foundation Models framework, a new API that lets third-party developers use the large language models at the heart of Apple Intelligence and build them into their apps.

With the Foundation Models framework, developers can integrate Apple's on-device models directly into their apps and build new features on top of Apple Intelligence.

"Last year, we took the first steps on a journey to bring users intelligence that's helpful, relevant, easy to use, and right where users need it, all while protecting their privacy. Now, the models that power Apple Intelligence are becoming more capable and efficient, and we're integrating features in even more places across each of our operating systems," said Craig Federighi, Apple's senior vice president of Software Engineering. "We're also taking the huge step of giving developers direct access to the on-device foundation model powering Apple Intelligence, allowing them to tap into intelligence that is powerful, fast, built with privacy, and available even when users are offline. We think this will ignite a whole new wave of intelligent experiences in the apps users rely on every day. We can't wait to see what developers create."

The Foundation Models framework lets developers build AI-powered features that work offline, protect privacy, and incur no inference costs. For example, an education app can generate quizzes from user notes on-device, and an outdoors app can offer offline natural language search.

Apple says the framework is available for testing starting today through the Apple Developer Program at developer.apple.com, and a public beta will be available through the Apple Beta Software Program next month at beta.apple.com. The framework includes built-in support for guided generation and tool calling, which Apple says makes it easier to integrate generative capabilities into existing apps.

Popular Stories

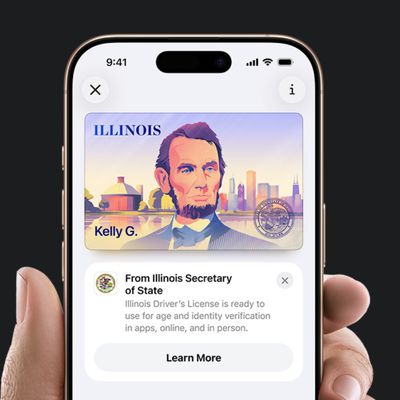

In select U.S. states, residents can add their driver's license or state ID to the Apple Wallet app on the iPhone and Apple Watch, and then use it to display proof of identity or age at select airports and businesses, and in select apps.

The feature is currently available in 13 U.S. states and Puerto Rico, and it is expected to launch in at least seven more in the future.

To set up the...

While the iPhone 18 Pro and iPhone 18 Pro Max are not expected to launch for another nine months, there are already plenty of rumors about the devices.

Below, we have recapped 12 features rumored for the iPhone 18 Pro models.

The same overall design is expected, with 6.3-inch and 6.9-inch display sizes, and a "plateau" housing three rear cameras

Under-screen Face ID

Front camera in...

Apple hasn't updated the Apple TV 4K since 2022, and 2025 was supposed to be the year that we got a refresh. There were rumors suggesting Apple would release the new Apple TV before the end of 2025, but it looks like that's not going to happen now.

Subscribe to the MacRumors YouTube channel for more videos.

Bloomberg's Mark Gurman said several times across 2024 and 2025 that Apple would...

Earlier this month, Apple released iOS 26.2, following more than a month of beta testing. It is a big update, with many new features and changes for iPhones.

iOS 26.2 adds a Liquid Glass slider for the Lock Screen's clock, offline lyrics in Apple Music, and more. Below, we have highlighted a total of eight new features.

Liquid Glass Slider on Lock Screen

A new slider in the Lock...

2026 is almost upon us, and a new year is a good time to try out some new apps. We've rounded up 10 excellent Mac apps that are worth checking out.

Subscribe to the MacRumors YouTube channel for more videos.

Alt-Tab (Free) - Alt-Tab brings a Windows-style alt + tab thumbnail preview option to the Mac. You can see a full window preview of open apps and app windows.

One Thing (Free) -...

Samsung is working on a new foldable smartphone that's wider and shorter than the models that it's released before, according to Korean news site ETNews. The "Wide Fold" will compete with Apple's iPhone Fold that's set to launch in September 2026.

Samsung's existing Galaxy Z Fold7 display is 6.5 inches when closed, and 8 inches when open, with a 21:9 aspect ratio when folded and a 20:18...

The European Commission today praised the interoperability changes that Apple is introducing in iOS 26.3, once again crediting the Digital Markets Act (DMA) with bringing "new opportunities" to European users and developers.

The Digital Markets Act requires Apple to provide third-party accessories with the same capabilities and access to device features that Apple's own products get. In iOS...

Apple is working on a foldable iPhone that's set to come out in September 2026, and rumors suggest that it will have a display that's around 5.4 inches when closed and 7.6 inches when open. Exact measurements vary based on rumors, but one 3D designer has created a mockup based on what we've heard so far.

On MakerWorld, a user named Subsy has uploaded a 1:1 iPhone Fold replica (via Macworld), ...

Apple's first foldable iPhone, rumored for release next year, may turn out to be smaller than most people imagine, if a recent report is anything to go by. According to The Information, the outer display on the book-style device will measure just 5.3 inches – that's smaller than the 5.4-inch screen on the iPhone mini, a line Apple discontinued in 2022 due to poor sales. The report has led ...

('https://www.macrumors.com/2025/06/09/foundation-models-framework/')
